In Short

- Cerebras’s Wafer-Scale Engine has been shown to run AI inference faster than Groq.

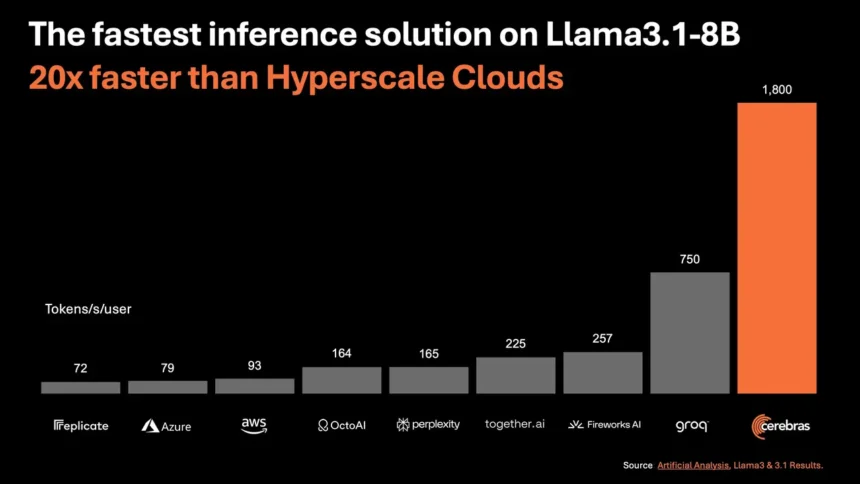

- With the Llama 3.1 8B model, Cerebras Inference generates up to 1,800 tokens per second; with the 70B model, it generates 450 tokens per second.

- In contrast, Groq runs the 8B and 70B models at up to 750 T/s and 250 T/s, respectively.

Cerebras has finally opened access to its Wafer-Scale Engine (WSE) for AI inference, and it runs the Llama 3.1 8B model at 1,800 tokens per second. With the larger Llama 3.1 70B variant, Cerebras reaches 450 tokens per second. Until now, Groq held the title of fastest AI inference provider.

Introducing Cerebras Inference

— Cerebras (@CerebrasSystems) August 27, 2024

‣ Llama3.1-70B at 450 tokens/s – 20x faster than GPUs

‣ 60c per M tokens – a fifth the price of hyperscalers

‣ Full 16-bit precision for full model accuracy

‣ Generous rate limits for devs

Try now: https://t.co/50vsHCl8LM pic.twitter.com/hD2TBmzAkw

Cerebras has developed its own wafer-scale processor that integrates close to 900,000 AI-optimized cores and packs 44GB of on-chip memory (SRAM). As a result, the AI model is stored directly on the chip rather than in external memory, which gives the cores far more memory bandwidth to work with. Cerebras is also running Meta’s full 16-bit precision weights, meaning there is no compromise on accuracy.
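To put the 44GB figure in context, here is a rough back-of-the-envelope sketch of how much memory the 16-bit weights alone would occupy. It assumes nominal parameter counts (8 billion and 70 billion), 2 bytes per parameter, and decimal gigabytes; it ignores activations and the KV cache. The function names are made up for illustration, and the article does not say how Cerebras actually distributes larger models, so treat the result as a lower bound under these assumptions rather than a description of the real deployment.

```python
# Back-of-the-envelope estimate of 16-bit weight size vs. on-chip SRAM.
# Assumptions (not from the article): nominal parameter counts, decimal GB,
# weights only (no activations or KV cache).

BYTES_PER_PARAM_16BIT = 2          # 16-bit precision = 2 bytes per weight
SRAM_BYTES = 44 * 10**9            # 44GB of on-chip SRAM, as quoted above


def weight_bytes(n_params: int) -> int:
    """Memory needed to hold the weights at 16-bit precision."""
    return n_params * BYTES_PER_PARAM_16BIT


def min_wafers(n_params: int) -> int:
    """Smallest number of 44GB chips whose combined SRAM fits the weights."""
    needed = weight_bytes(n_params)
    return -(-needed // SRAM_BYTES)  # integer ceiling division


for name, n_params in (("8B", 8 * 10**9), ("70B", 70 * 10**9)):
    gb = weight_bytes(n_params) / 10**9
    print(f"Llama 3.1 {name}: ~{gb:.0f} GB of weights, "
          f"at least {min_wafers(n_params)} chip(s) of SRAM")
```

Under these assumptions the 8B model's weights fit in a single chip's SRAM, while the 70B model's weights exceed 44GB and would have to be spread across several chips.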

Cerebras has set a new record for AI inference speed, serving Llama 3.1 8B at 1,850 output tokens/s and 70B at 446 output tokens/s. @CerebrasSystems has just launched their API inference offering, powered by their custom wafer-scale AI accelerator chips.

— Artificial Analysis (@ArtificialAnlys) August 27, 2024

Cerebras Inference is… pic.twitter.com/WWkTGy1qpE

When I put Cerebras’s claim to the test, it returned answers quickly. The smaller Llama 3.1 8B model ran at 1,830 tokens per second, and the 70B model managed 446 tokens per second. By comparison, Groq ran the 8B and 70B models at 750 T/s and 250 T/s, respectively.
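As a quick illustration of what these numbers mean in practice, the sketch below computes the speed ratio between the two providers and the time each would take to generate a fixed-length response. It uses only the throughput figures quoted above; the dictionary and function names are illustrative, and the timing ignores network latency and time to first token, so real-world response times will be longer.

```python
# Throughput figures quoted in the article, in output tokens per second.
THROUGHPUT = {
    "Cerebras": {"8B": 1830, "70B": 446},
    "Groq": {"8B": 750, "70B": 250},
}


def speedup(model: str) -> float:
    """How many times faster Cerebras is than Groq for the given model."""
    return THROUGHPUT["Cerebras"][model] / THROUGHPUT["Groq"][model]


def generation_seconds(provider: str, model: str, n_tokens: int) -> float:
    """Idealized time to generate n_tokens at the quoted throughput."""
    return n_tokens / THROUGHPUT[provider][model]


for model in ("8B", "70B"):
    print(f"Llama 3.1 {model}: Cerebras is {speedup(model):.2f}x faster")
    for provider in ("Cerebras", "Groq"):
        secs = generation_seconds(provider, model, 1000)
        print(f"  {provider}: {secs:.2f} s for 1,000 tokens")
```

On these figures, Cerebras comes out roughly 2.4 times faster on the 8B model and about 1.8 times faster on the 70B model.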

Artificial Analysis independently assessed Cerebras Inference and concluded that it does offer unmatched AI inference speed. To try Cerebras Inference for yourself, use the link in Cerebras’s announcement above.